Vectors and Matrices

Neural Networks From Scratch lecture notes 00

Hey there!

Welcome to the first lesson of the Neural Networks from Scratch course, where we'll build a fully functional tensor library (with automatic differentiation and all) in NumPy, mastering the inner workings of neural networks along the way.

Before we dive into the meaty parts and start building computational graphs left and right, we'll take a brief look at the building blocks of machine learning: vectors and matrices.

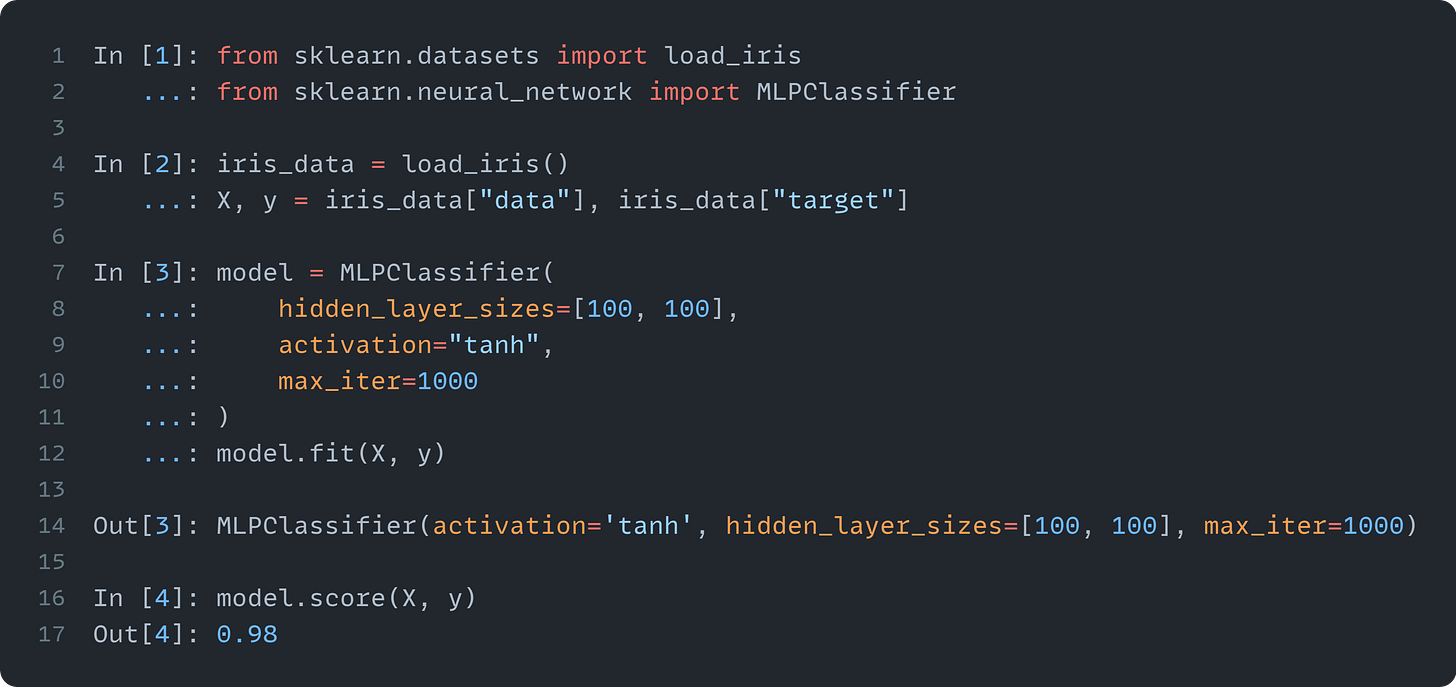

To see what I'm talking about, let's take a look at machine learning from a high level. Training a model is simpler than you think: it can be done in just six lines, including loading the data.
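As a rough sketch of what such a six-line program might look like, here is one possibility using scikit-learn. The dataset (`load_iris`) and the model (`LogisticRegression`) are assumptions chosen for illustration, not necessarily the ones used in the course; what matters is the pattern of loading `X` and `y`, creating a model, and fitting it.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Assumed dataset for illustration: X is a table of features, y holds the labels.
X, y = load_iris(return_X_y=True)
# Assumed model for illustration; max_iter is raised so the solver converges.
model = LogisticRegression(max_iter=1000)
model.fit(X, y)
# Mean accuracy on the training data.
accuracy = model.score(X, y)
```

Not counting comments, that is six lines, from loading the data to evaluating the trained model.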

No matter where we come from, this is fascinating. Modern frameworks (such as scikit-learn and PyTorch) hide a ton of complexity from the user, allowing us to build and experiment faster than ever. However, this comes at a price. Pre-built solutions can take you only so far; to master machine learning, you must understand how the algorithms work.

This course is about what's under the hood. We'll take these six lines, deconstruct them, and rebuild everything from scratch.

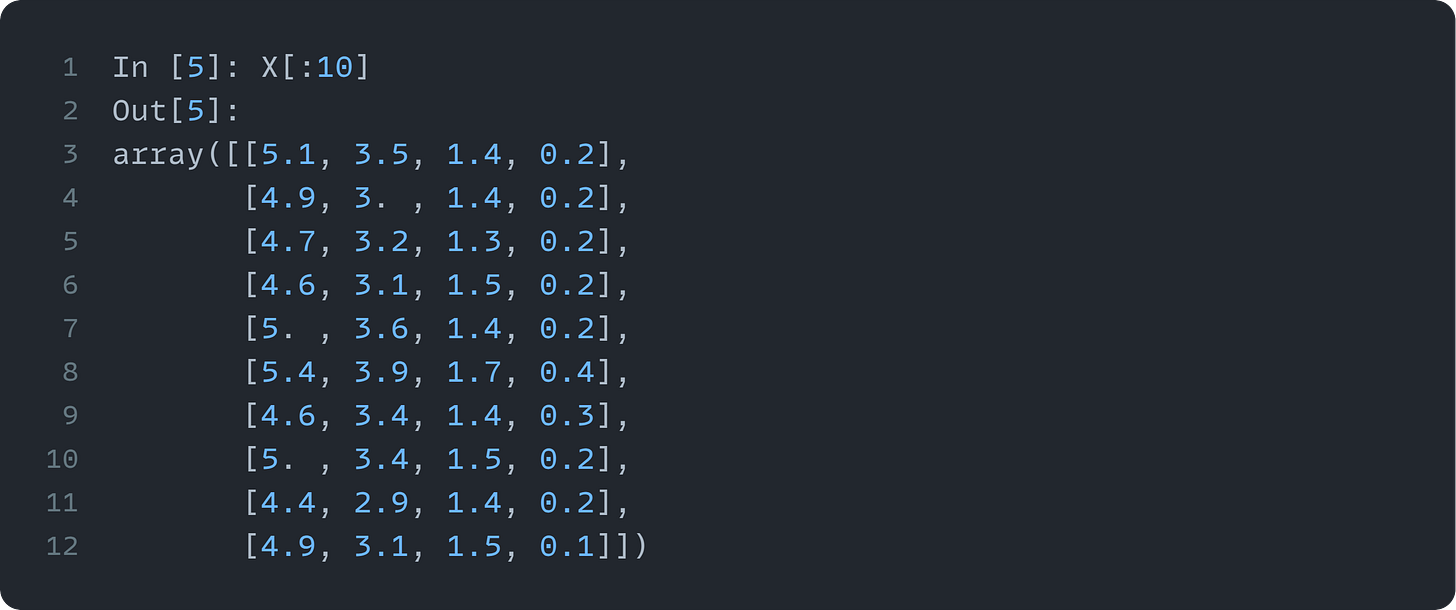

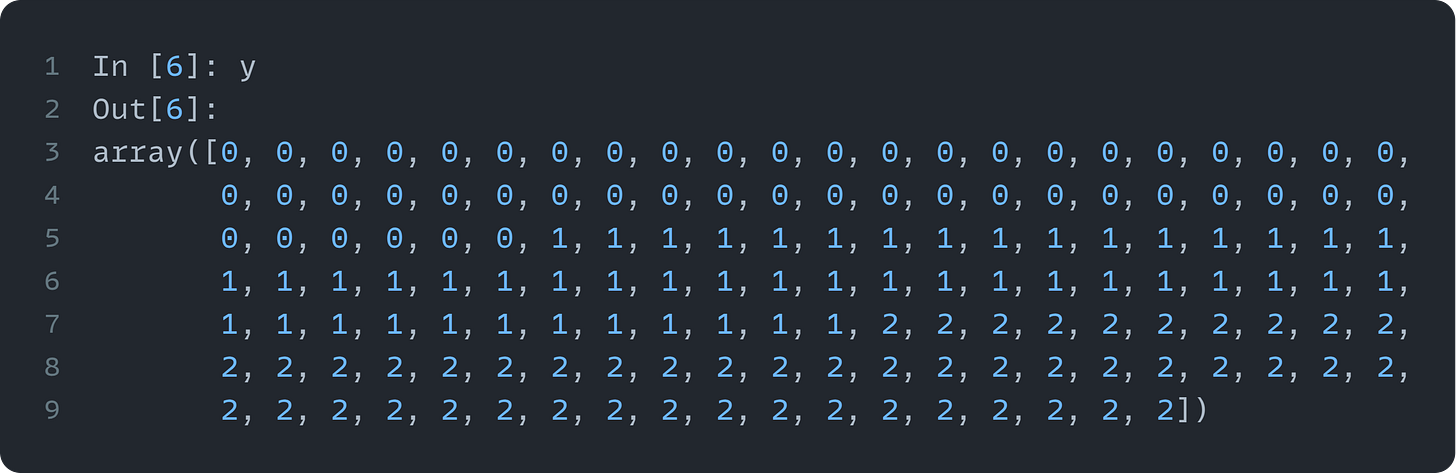

We'll start with the data, that is, the X and y objects. Let's take a look!

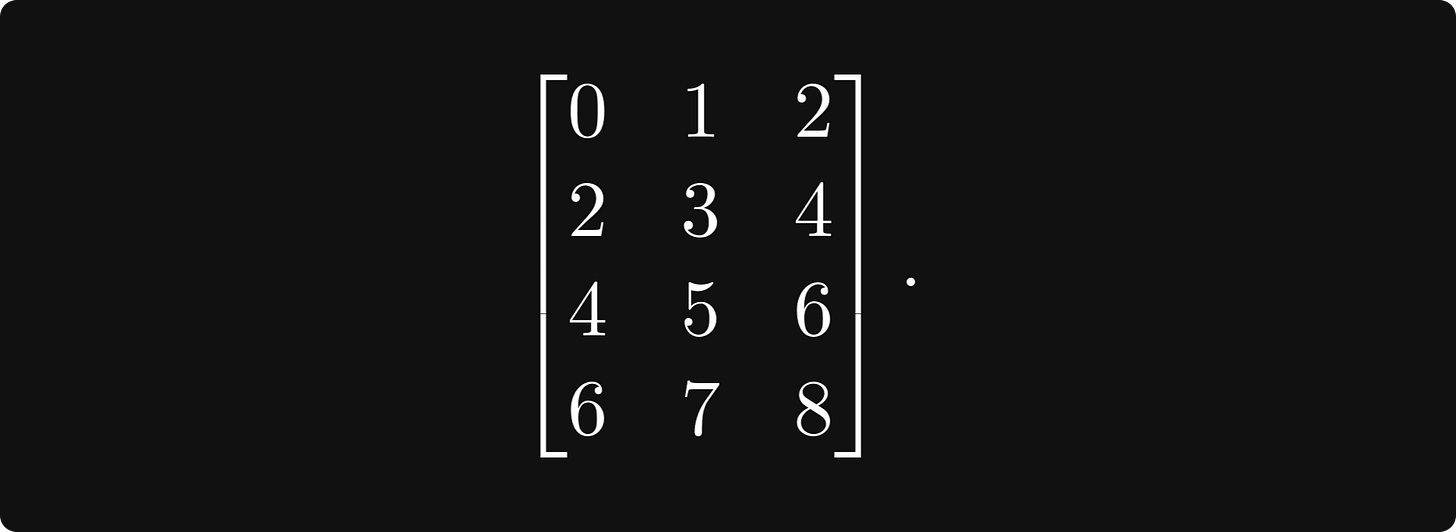

These are known as vectors and matrices. Intuitively, we can think about vectors as tuples of numbers, like (-1.2, 3.5, π), and matrices as tables of scalars.

What truly gives vectors and matrices their power is not just their structure, but their operations. We can add and scale vectors, as well as add, scale, and multiply matrices.
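To make these operations concrete, here is a minimal NumPy sketch. The arrays `u`, `v`, `A`, and `B` hold made-up values chosen for illustration; they do not come from the course's dataset.

```python
import numpy as np

# Hypothetical example vectors (values are illustrative only).
u = np.array([1.0, 2.0, 3.0])
v = np.array([-1.0, 0.5, 2.0])

vector_sum = u + v        # elementwise addition
scaled_vector = 2.0 * u   # multiplication by a scalar

# Hypothetical example matrices, stored as 2D arrays.
A = np.array([[1.0, 2.0],
              [3.0, 4.0]])
B = np.array([[0.0, 1.0],
              [1.0, 0.0]])

matrix_sum = A + B        # elementwise addition
scaled_matrix = 0.5 * A   # multiplication by a scalar
matrix_product = A @ B    # matrix multiplication (rows of A times columns of B)
```

Note that `A @ B` is the matrix product, whereas `A * B` would multiply the entries elementwise. Here `B` swaps the two columns of `A`, so `matrix_product` is `[[2, 1], [4, 3]]`.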

Let's start with vectors.
