The taxonomy of machine learning paradigms

Setting the stage for learning problems

Hi there! This post is the next chapter of my upcoming Mathematics of Machine Learning book, available in early access.

New chapters are available for the premium subscribers of The Palindrome as well, but there are 40+ chapters (~450 pages) available exclusively for members of the early access.

To look behind the curtain of machine learning algorithms, we have to precisely formulate the problems that we deal with. Three important parameters determine a machine learning paradigm: the input, the output, and the training data.

All machine learning tasks boil down to finding a model that provides additional insight into the data, i.e., a function f that transforms the input x into a useful representation y. This output can be a prediction, an action to take, a high-level feature representation, or something else entirely. We'll learn about all of them.

Mathematically speaking, the basic machine learning setup consists of:

a dataset D,

a function f that describes the true relation between the input and the output,

and a parametric model g that serves as our estimation of f.

Remark. (Common abuses of machine learning notation.) Note that although the function f only depends on the input x, the parametric model g also depends on the parameters and the training dataset.

Thus, it is customary to write g as g(x; w, D), where w represents the parameters, and D is our training dataset.

This dependence is often omitted, but keep in mind that it's always there.
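To make the notation g(x; w, D) concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the "true" function f is a line we pick ourselves (in practice f is unknown), the dataset D is sampled from f with Gaussian noise, and the model g is a line whose parameters w are obtained from D by ordinary least squares.

```python
import random


def f(x):
    """Hypothetical 'true' relation. In a real problem, f is unknown."""
    return 2.0 * x + 1.0


def make_dataset(n, noise=0.1, seed=0):
    """Sample n noisy observations (x, y) of f. Purely synthetic data."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, f(x) + rng.gauss(0.0, noise)) for x in xs]


def fit(D):
    """Least squares fit of a line; the returned parameters w depend on D."""
    n = len(D)
    mean_x = sum(x for x, _ in D) / n
    mean_y = sum(y for _, y in D) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in D)
    var_x = sum((x - mean_x) ** 2 for x, _ in D)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return (slope, intercept)


def g(x, w):
    """Parametric model g(x; w). The dependence on D is hidden inside w."""
    slope, intercept = w
    return slope * x + intercept


D = make_dataset(200)
w = fit(D)          # w is a function of the training data
print(w, g(0.5, w), f(0.5))
```

Calling fit on a different sample gives different parameters, which is exactly the dependence on D that the shorthand g(x) hides.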

We make no restrictions about how the model g is constructed. It can be a deterministic function like g(x) = -13.2 x² + 0.92 x + 3.0 or a probability distribution g(x) = P(Y = y | X = x). Models come in many families, such as generative and discriminative ones. We'll talk about them in detail; in fact, models will be the focal point of the next few chapters.
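The two kinds of models can be put side by side in a short sketch. The deterministic one is the polynomial from the text. The probabilistic one is an assumption of mine: a logistic model with made-up parameters w and b, chosen only to show a model that returns a distribution over the labels {0, 1} instead of a single number.

```python
import math


def g_deterministic(x):
    """The polynomial from the text: one input, one output value."""
    return -13.2 * x**2 + 0.92 * x + 3.0


def g_probabilistic(x, w=1.5, b=-0.5):
    """Illustrative logistic model: returns P(Y = y | X = x) for y in {0, 1}.

    The parameters w and b are arbitrary placeholders, not fitted values.
    """
    p1 = 1.0 / (1.0 + math.exp(-(w * x + b)))
    return {0: 1.0 - p1, 1: p1}


print(g_deterministic(1.0))   # a single number
print(g_probabilistic(1.0))   # a distribution over the two labels
```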

First, let's focus on the paradigms themselves. There are four major ones:

supervised learning,

unsupervised learning,

semi-supervised learning,

and reinforcement learning.

What are these?

Supervised learning

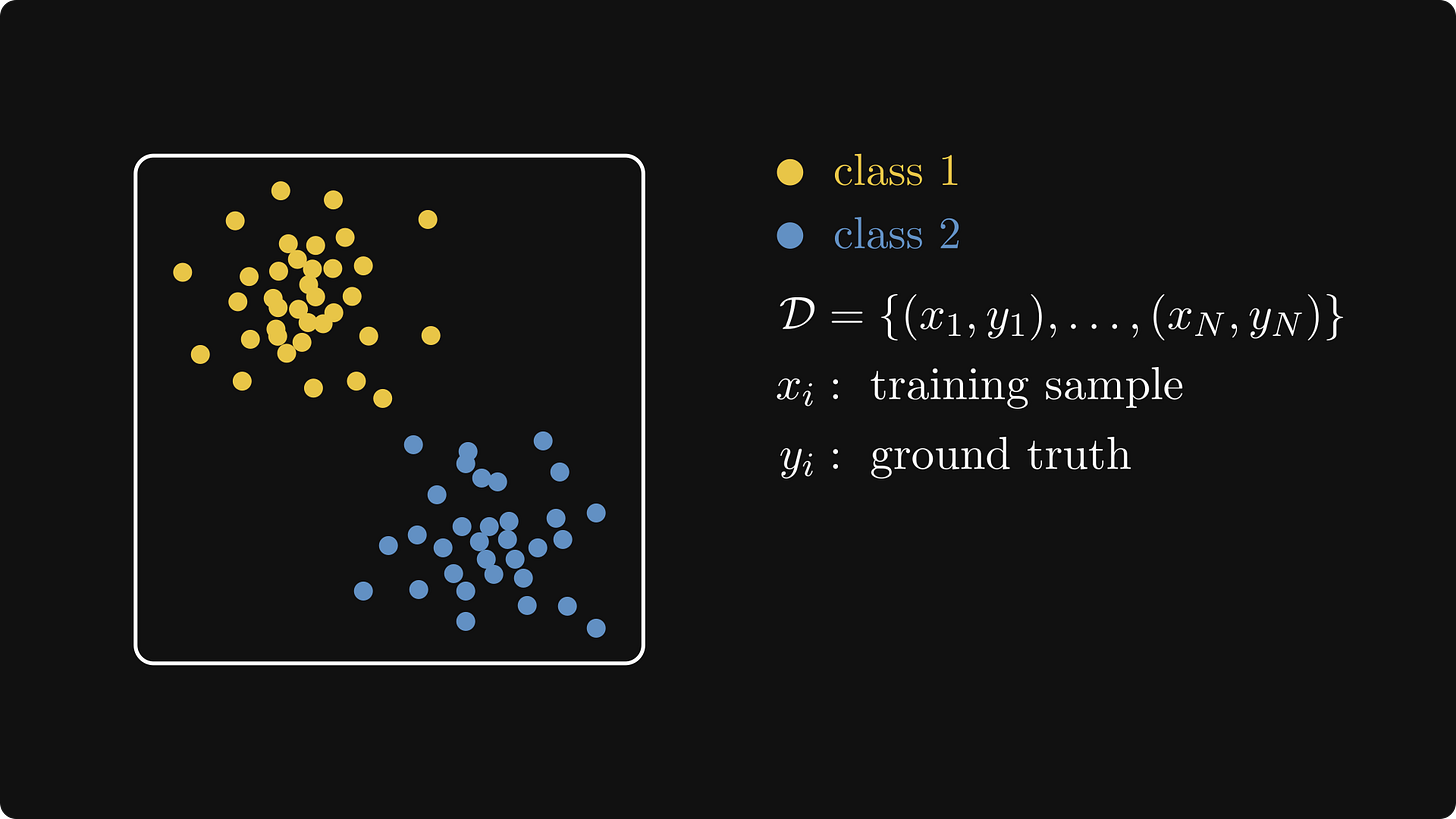

The most common paradigm is supervised learning. There, we have inputs xᵢ and ground truth labels yᵢ that form our training dataset D = {(x₁, y₁), (x₂, y₂), …}.

The labels can be anything, such as numbers or text, but they are all available to us. The goal is to construct a function that models the relationship between the input x and the target variable y.

Here are some typical supervised learning problems.

Example 1. You are building the revolutionary "Hot Dog or Not" mobile app, aiming to determine whether the phone's camera is pointed towards a hot dog. That is, your input is an n × m matrix X (where n and m represent the dimensions of the image), and the output is a categorical variable y ∈ {hot dog, not hot dog}.
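In code, the shape of this problem might look like the sketch below. The brightness threshold is a stand-in I made up so that the function has a body; it is not a real hot dog detector. The point is only the signature: an n × m matrix goes in, one of two labels comes out.

```python
LABELS = ("hot dog", "not hot dog")


def classify(X, threshold=0.5):
    """Placeholder model mapping an n x m grayscale image to a label.

    The rule (mean pixel intensity above a threshold) is hypothetical and
    only illustrates the input and output types.
    """
    n, m = len(X), len(X[0])
    mean_intensity = sum(sum(row) for row in X) / (n * m)
    return LABELS[0] if mean_intensity > threshold else LABELS[1]


# A tiny synthetic 2 x 3 "image" with pixel values in [0, 1].
X = [[0.9, 0.8, 0.7],
     [0.6, 0.9, 0.8]]
print(classify(X))
```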

Example 2. You are a quant, tasked with predicting the future price of Apple stock based on its price history over the last week. That is, the input is a seven-dimensional vector x = (x₁, …, x₇), and the output is a real number y.
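One way to turn a price history into a supervised dataset is a sliding window: every run of seven consecutive prices becomes an input x, and the price right after it becomes the target y. The sketch below assumes this construction, and the prices in it are invented placeholders, not real Apple quotes.

```python
def make_windows(prices, window=7):
    """Build (x, y) pairs: x is `window` consecutive prices, y is the next one."""
    pairs = []
    for i in range(len(prices) - window):
        x = tuple(prices[i:i + window])
        y = prices[i + window]
        pairs.append((x, y))
    return pairs


# Synthetic daily prices, made up for illustration only.
prices = [100.0, 101.5, 99.8, 102.3, 103.1, 102.7, 104.0, 105.2, 104.8, 106.1]
D = make_windows(prices)

for x, y in D:
    print(x, "->", y)
```

Ten prices yield three training pairs here, each with a seven-dimensional input and a single real-valued target.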