Probabilities, densities, and distributions

Setting common misconceptions straight

Raise your hands if you have seen something like this before.

This is the so-called standard normal distribution, and the above expression is often referred to as a “probability”.

Contary to popular belief, this is NOT a probability. I have seen this mistake in so many times that it prompted me to write my early Twitter threads about probability, from which my Mathematics of Machine Learning book grew out.

So, I take this issue to heart.

In this post, we’ll talk about what this object is, and why it is essential to study random variables and do statistics.

Probability 101

I could talk days weeks months about probability. This is easily one of my favorite subjects in mathematics, and its precise theoretical development is beautiful.

However, to spare you all the lengthy measure-theoretic discussions, here is a really quick introduction.

Probability is a function P(A) that takes an event (that is, a set), and returns a real number between 0 and 1. The function P is also called a probability measure.

The event is a subset of the so-called sample space, a set often denoted with the capital Greek omega Ω.

A probability measure must satisfy three conditions: nonnegativity, additivity, and the probability of the entire sample space must be 1.

These are called the Kolmogorov axioms of probability, named after Andrey Kolmogorov, who first formalized them.

Directly working with probability is hard. Most often, we interact with probabilities and events through random variables. Let’s see what are those!

Random variables

When dealing with observations under uncertainty, we are interested in quantitative measurements from our experiments. Say, we are modeling the lifespan of lightbulbs.

These measurements are called random variables, and they are one of the principal objects of probability theory.

Mathematically, a random variable is a function that maps the sample space Ω to the set of real numbers. If you are having trouble imagining this, here is an illustration for you.

There is a serious issue with this definition: sample spaces and random variables are way too abstract to be easily used in practice. For instance, “lifespan of the lightbulb“ is not an exact mathematical formula, and thus, we can’t perform a quantitative analysis with it.

What’s the cure for this problem?

Using the random variable X to map the probability measure P from Ω to the set of real numbers.

Distribution functions

Most often, we are interested in questions like the probability of X falling into a given range, say, my lightbulb will live less than a year.

As it turns out, knowing these probabilities is all we need to study a random variable.

To be mathematically precise, we are looking at the so-called level sets.

I know, this is hard to even look at, let alone understand. So, here is a visualization for you.

Elements from the level set are mapped to the range (-∞, x] by X.

The probabilities of the level sets encode all relevant information about the random variable. If we view x as a variable, these quantities can be used to define a real function. One that we can use as a proxy for the actual random variable.

This function is called the cumulative distribution function (or CDF in short), and it’s a cornerstone of probability theory and statistics.

Here is the precise definition.

(Note that “X ≤ x“ inside the argument of the probability measure is an abbreviation for the level set. It’s much more concise, so this notation is used in practice.)

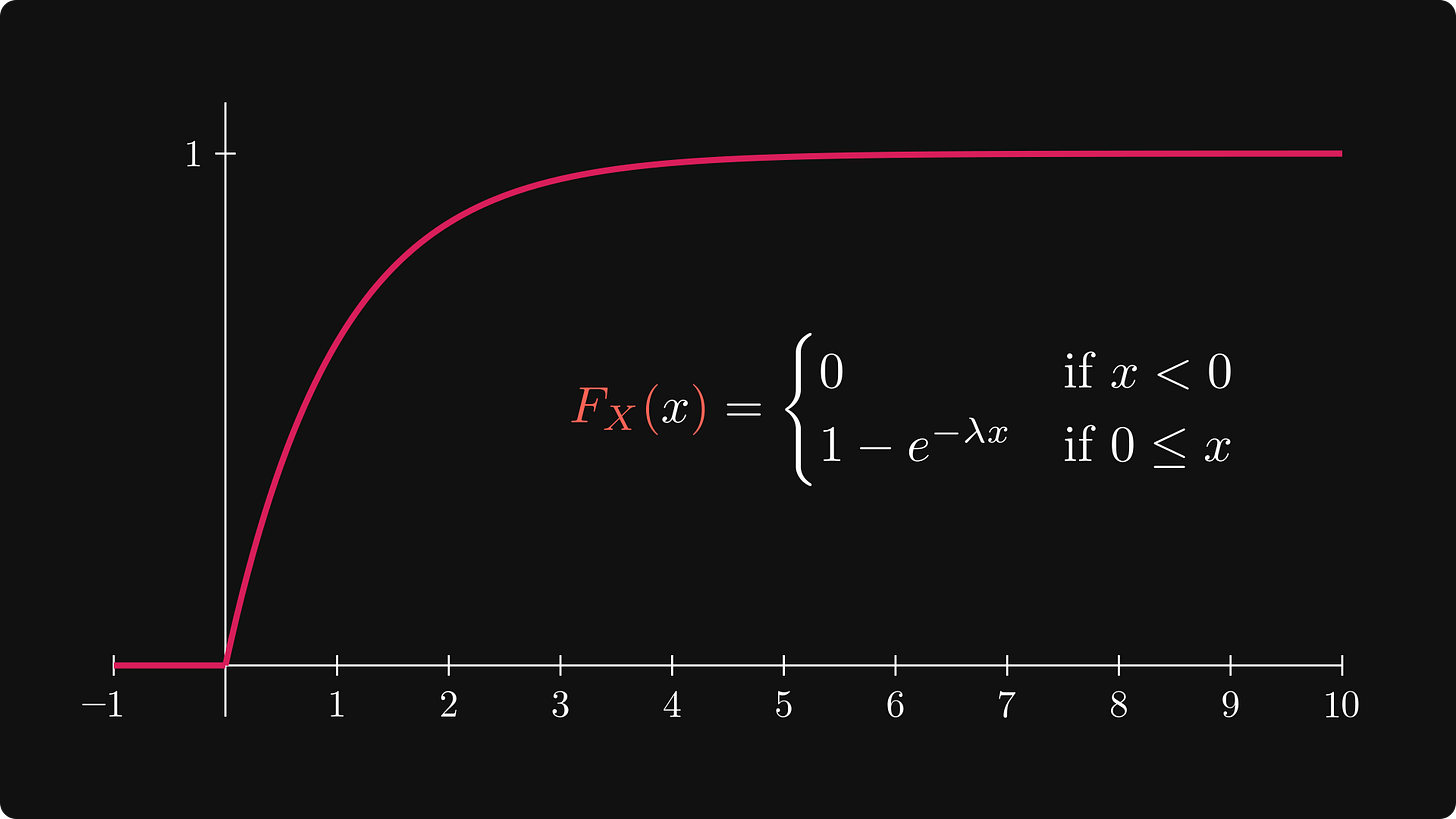

For instance, the lifespan of a lightbulb is said to be exponentially distributed, with the following CDF.

(The λ is a parameter of the distribution.) This is what the exponential distribution looks like.

There are tons of other probability distributions: uniform, Gaussian, Pareto, Weibull, Cauchy; the list goes on forever.

However, cumulative distribution functions have a significant drawback: they only carry global information by default, but not local. Here’s what I mean.

Density functions

Let’s talk about our random variable X once more. Recall that X is the lifespan of our lightbulb.

What is the probability that the lightbulb goes out exactly after x seconds? In other words, we are looking for the quantity P(X = x).

A brief calculation shows that it is actually zero, which is quite surprising.

In other words, the probability of each outcome is zero, without exception. Yet, the exponentially distributed X is far from that boring.

This is not only true for the exponential distribution. It is the same for the uniform, Gaussian, Pareto, and many others.

Isn’t it strange?

There is an explanation. We can simply express the probability of X falling into the segment (a, b] with the increments of the CDF. See the formula below:

Increments of the CDF on the left, the probability of X falling into the segment (a, b] on the right.

Does this look familiar?

Upon closer inspection, this formula shows a close resemblance with the fundamental theorem of calculus.

Increments of the function F on the left, integral of its derivative on the right.

Thus, the function on the right

represents the distribution function via integration,

and conveys local information about the random variable,

which is exactly what we wanted! This function is so special that it has its own name: it is the probability density function (or PDF in short) of the random variable X.

The precise definition goes like this. If there exists a function such that its integral over the interval [a, b] equals the increment of the cumulative distribution function there, then it is called a density function of X.

If the CDF is differentiable, its derivative is a density function.

Note the article “a” in the previous sentence, instead of “the“. That’s because density functions are not unique. Think about it: if you modify its value at a single point, the integrals will be unchanged.

Another thing to note that the everywhere-differentiability of the CDF is not necessary for the density function to exist.

To see some examples, here is the density function of our recurring example, the exponential distribution.

(Again, note that the exponential CDF is not differentiable at 0, yet it is differentiable everywhere else, and the derivatives there give the PDF.)

This is how it looks on a plot.

You can imagine the density as a “terrain map” of the probability distribution.

Even though the probabilities of individual outcomes are zero, the density function clearly shows that as x grows, it gets more and more unlikely.

As the value of the integral corresponds to the area under the curve, this gives a new interpretation of the probabilities.

The normal distribution

Let’s revisit our starting example: the bell curve. This is known as the density of the standard normal (or Gaussian) distribution.

Its density is concentrated around zero, and it vanishes as we move farther from it.

One thing is for sure: it is definitely not a probability.

In this case, the density function is even more important. Why? Because the cumulative distribution function of normal distributions cannot be expressed in a closed form, only as an integral.

It’s not that mathematicians are not clever enough to do it: no such formula exists, and we can prove this. (You have to trust me on this; it’s way beyond the scope of the current post.)

Conclusion

Let’s sum up what we have learned so far.

Even though random variables are the central objects of probability theory and statistics, it is very hard to work with them directly. The reasons are twofold. First, the sample space Ω can be quite abstract; and second, random variables often don’t have tractable formulas, just vague descriptions like “lifespan of a lightbulb“, “weight of a person“, or something similar.

To cut the Gordian Knot, we have introduced cumulative distributive functions, a tool that transfers random variables from an abstract state space to the set of real numbers.

Distribution functions are also not enough, as they don’t carry local information about the random variable’s behavior at a random real number. At least, not on the surface.

But their derivative does, leading us to the concept of probability density functions. These are often conflated with probabilities, but they are not. Rather, they describe the distribution’s rate of change, giving us its “terrain map”.

Nowadays, I am writing a lot about probability for my upcoming Mathematics of Machine Learning book. I release the chapters as I finish them, and last time, it was about distribution functions. Next time, it’s all about the density, which inspired me to write this post.

Check it out if you are interested in the fine details!

The older I get, the more I love math. Thx.

It's a probability density