Introduction to Computational Graphs

Neural Networks From Scratch, Part I

In computer science and mathematics, constructing a new representation of an old concept can kickstart new fields. One of my favorite examples is the connection between graphs and matrices: representing one as the other has proven extremely fruitful for both. Translating graph theory into linear algebra, and vice versa, was the key to solving numerous hard problems.
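To get a feel for the graph-matrix connection, here is a minimal sketch in plain Python. The tiny directed graph, the names `edges`, `A`, and `A2`, and the `matmul` helper are all made up for illustration. The graph is stored as an adjacency matrix, and a single matrix product then answers a graph question: how many two-step walks lead from one node to another.

```python
# A small directed graph as an edge list (hypothetical example data).
edges = [(0, 1), (1, 2), (2, 0), (0, 2)]
n = 3

# Graph -> matrix: A[i][j] = 1 if there is an edge from node i to node j.
A = [[0] * n for _ in range(n)]
for i, j in edges:
    A[i][j] = 1


def matmul(X, Y):
    """Multiply two square matrices given as nested lists."""
    size = len(X)
    return [
        [sum(X[i][k] * Y[k][j] for k in range(size)) for j in range(size)]
        for i in range(size)
    ]


# Matrix -> graph: entry (i, j) of A * A counts the two-step walks from i to j.
A2 = matmul(A, A)

for row in A2:
    print(row)
```

For instance, `A2[0][2]` equals 1 because there is exactly one two-step walk from node 0 to node 2, namely 0 → 1 → 2.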

What does this have to do with machine learning? If you have ever seen a neural network, you know the answer.

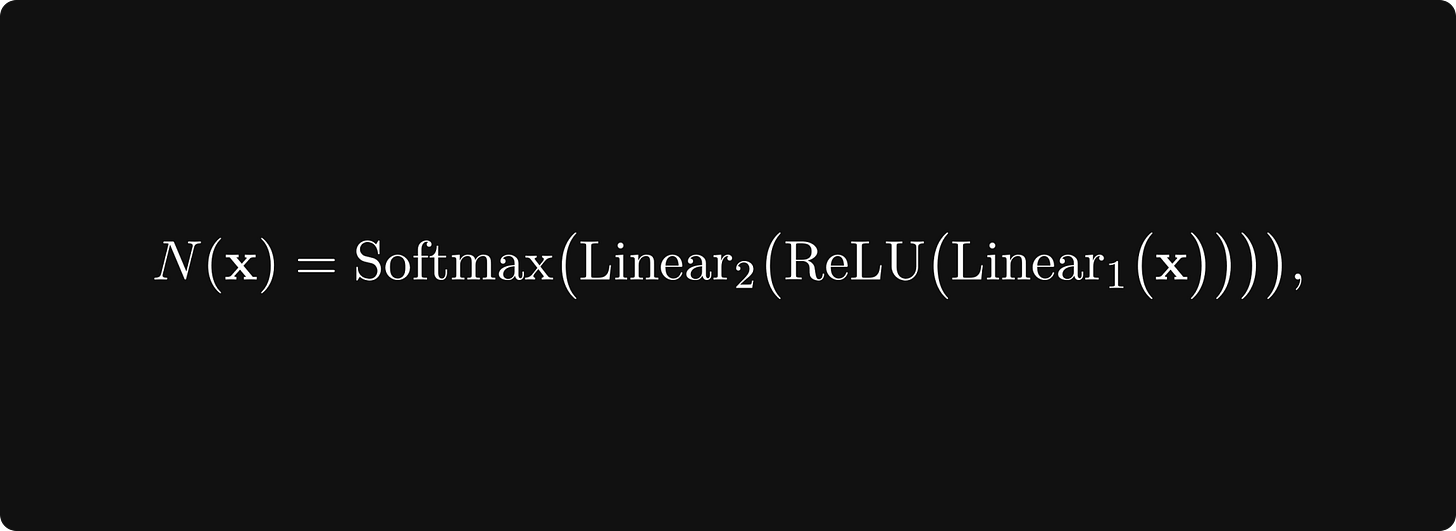

From a purely mathematical standpoint, a neural network is a composition of a sequence of functions, say, Softmax, Linear, and ReLU, whatever those mystical functions might be.
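To make the "composition of functions" view concrete, here is a minimal sketch in plain Python. The particular architecture (Linear, then ReLU, then Linear, then Softmax), the weights, and the helper names `linear`, `relu`, `softmax`, and `compose` are illustrative assumptions, not something fixed by the text above.

```python
import math


def linear(weights, bias):
    """Return the function x -> W x + b for a fixed weight matrix and bias."""
    def f(x):
        return [
            sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)
        ]
    return f


def relu(x):
    """Apply max(0, .) to every component."""
    return [max(0.0, xi) for xi in x]


def softmax(x):
    """Turn a vector of scores into a probability distribution."""
    m = max(x)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(xi - m) for xi in x]
    total = sum(exps)
    return [e / total for e in exps]


def compose(*fs):
    """compose(f, g, h)(x) computes f(g(h(x)))."""
    def composed(x):
        for f in reversed(fs):
            x = f(x)
        return x
    return composed


# Made-up parameters, for illustration only.
linear_1 = linear([[1.0, -1.0], [0.5, 2.0]], [0.0, -1.0])                   # 2 -> 2
linear_2 = linear([[1.0, 0.0], [0.0, 1.0], [-1.0, 1.0]], [0.0, 0.0, 0.0])  # 2 -> 3

# The whole network is nothing more than a composition of functions.
network = compose(softmax, linear_2, relu, linear_1)

probs = network([1.0, 2.0])
print(probs)
```

Written this way, `network(x)` is literally `softmax(linear_2(relu(linear_1(x))))`: each layer is a function, and the model is their composition.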

On the other hand, take a look at the figure below. Chances are, you have already seen something like it.