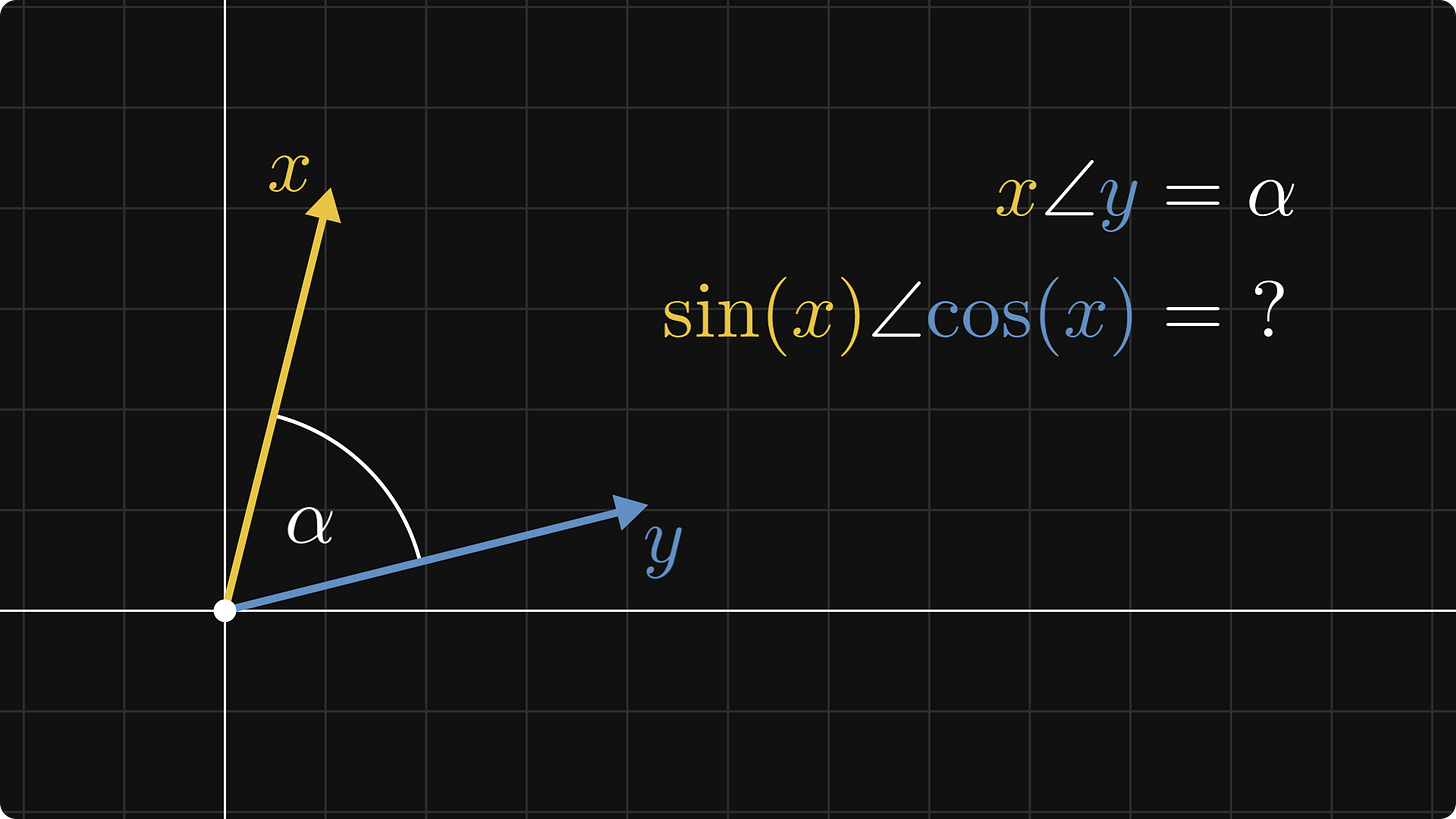

How to measure the angle between two functions

Extending the definition of angles and orthogonality beyond Euclidean spaces

This will surprise you: sine and cosine are orthogonal to each other.

Even the notion of the enclosed angle between sine and cosine is unclear, let alone its exact value. How do we even define the angle between two functions? This is not a trivial matter. What’s intuitive for vectors in the Euclidean plane is a mystery for objects such as functions.

This week, we’ll dive deep into the concept of angles and orthogonality. Generalizing them beyond the Euclidean plane makes technologies such as telecommunication and image compression work. There are hardly any areas of physics where orthogonal basis functions are not used.

The recipe for generalization is simple. Take a familiar concept, pinpoint its core properties, then turn it upside down and use those properties to define it. This is how we’ll go

from geometric vectors to function spaces,

from dot product to positive, symmetric, bilinear functionals,

and from 90° angles to zero inner products.

Let’s get to it!

Vectors from a bird’s eye view

We start by looking at the simplest representation of vectors: arrows on the Euclidean plane. One key idea of Descartes was that points in space can be described by tuples of numbers, their coordinates. Each point can then be pictured as an arrow starting from the origin. These arrows are called vectors.

Descartes’ idea revolutionized science. It's like the invention of the wheel, but for math. Translating points into tuples of numbers enabled us to

replace geometry with algebra,

and give quantitative instead of qualitative models.

But what makes a vector?

Two things: addition and scalar multiplication.

To be more precise, we call a set a vector space if we can add its elements together, multiply them by scalars, and these operations behave nicely (for example, addition is commutative and scalar multiplication distributes over it). Vectors are the elements of vector spaces.

If you need a refresher, check out the formal definition!

The simplest vector space is the Euclidean plane, one that you are familiar with. However, the concept of a “vector” is much more general. Any kind of mathematical object can be a vector, as long as we can find a way to add and scale such objects.

Here is a surprising example: functions!

Functions as vectors

There are several ways to define vector spaces of functions, so we will stick to the simplest one. Let’s consider all functions that are

defined on the interval [-π, π],

and whose square is integrable.

(We’ll see why we require the square to be integrable, rather than the function itself.)

If you are having trouble imagining this, feel free to think about functions that are continuous on [-π, π]. For example, the famous trigonometric functions sine and cosine belong here.

With addition and scalar multiplication, these functions form a vector space. We call this the L² space.
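
To make "adding and scaling functions" concrete, here is a minimal Python sketch. The helper names `add` and `scale` are made up for illustration; they simply apply the operations pointwise.

```python
import math

def add(f, g):
    """Pointwise sum: (f + g)(x) = f(x) + g(x)."""
    return lambda x: f(x) + g(x)

def scale(c, f):
    """Pointwise scalar multiple: (c * f)(x) = c * f(x)."""
    return lambda x: c * f(x)

# A linear combination of sine and cosine is again a function on [-pi, pi].
h = add(scale(2.0, math.sin), scale(-1.0, math.cos))

print(h(0.0))           # 2*sin(0) - cos(0) = -1.0
print(h(math.pi / 2))   # 2*sin(pi/2) - cos(pi/2), close to 2
```

The result `h` is an ordinary function again, which is exactly what we need from a vector space: sums and scalar multiples stay inside the set.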

Function spaces such as L² have a stunningly rich structure. For instance, as opposed to Euclidean spaces, function spaces are (typically) infinite-dimensional.

The question remains: how can we define the enclosed angle between two functions? We’ll see this next.

The notion of angle

According to Wikipedia, an angle “is the figure formed by two rays, called the sides of the angle, sharing a common endpoint, called the vertex of the angle”. In the context of vector spaces, these sides are the vectors themselves, while the vertex is the origin.

How do we measure the angle? As we want to be precise, a protractor is not an option. (Protractors are also limited to our physical world. As we are about to see, vector spaces are infinitely richer.)

In the Euclidean plane, we have the perfect tool: the inner product. (Also called the dot product.)

The law of cosines shows that the inner product equals the product of the magnitudes and the cosine of the enclosed angle α: ⟨x, y⟩ = ‖x‖ ‖y‖ cos α.

(Some courses even define the inner product in this form.)

From this geometric form, we can express the angle of two vectors. In fact, this can be the definition of the angle!
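
Solving the geometric form for the angle gives α = arccos(⟨x, y⟩ / (‖x‖ ‖y‖)). A small Python sketch of this, assuming nonzero vectors given as tuples of floats (the function names are illustrative, not from any library):

```python
import math

def dot(x, y):
    """Euclidean inner product of two vectors of equal length."""
    return sum(a * b for a, b in zip(x, y))

def norm(x):
    """Magnitude of a vector, derived from the inner product."""
    return math.sqrt(dot(x, x))

def angle(x, y):
    """Angle in radians between two nonzero vectors."""
    c = dot(x, y) / (norm(x) * norm(y))
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    return math.acos(max(-1.0, min(1.0, c)))

print(math.degrees(angle((1.0, 0.0), (1.0, 1.0))))   # about 45
print(math.degrees(angle((1.0, 0.0), (0.0, 2.0))))   # about 90
```

Note that nothing in `angle` is specific to the plane: it only needs an inner product.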

We are getting closer. Can’t we translate the inner product to function spaces and use it to define the angle? We can, and we shall do this next.

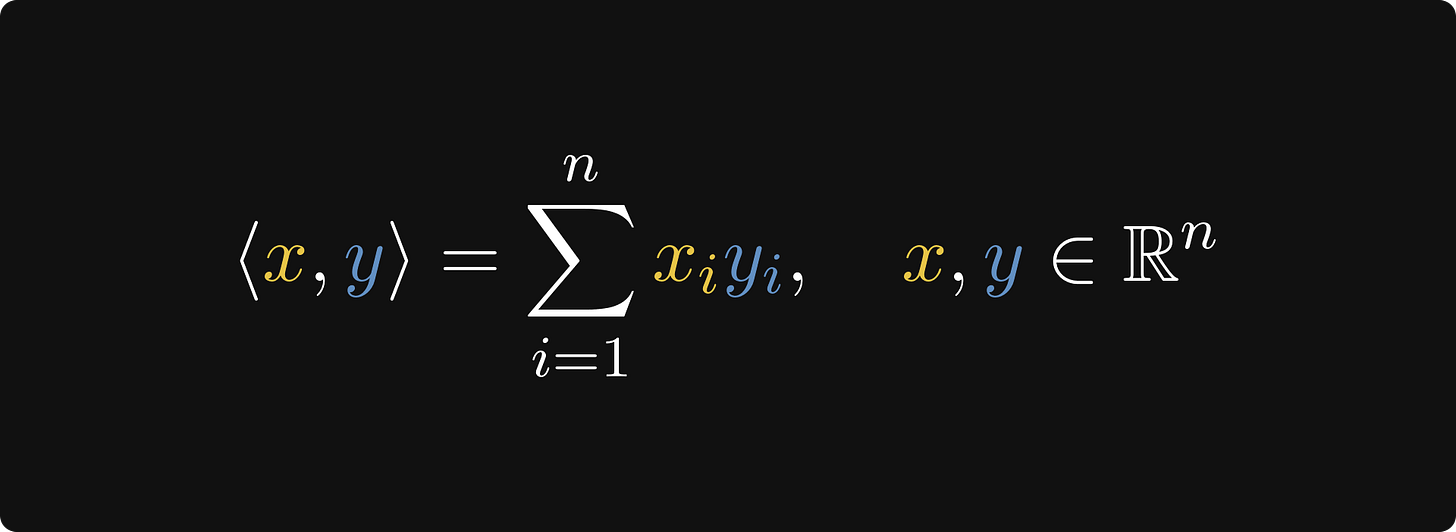

The general inner product

We have distilled our intuitive understanding of the Euclidean plane into the general concept of vector spaces. We can do the same with inner products by asking the right questions.

What makes the inner product? Three properties:

symmetry,

positivity,

and bilinearity.

All the downstream theorems rely on these three properties. Instead of providing an explicit formula, we define inner products in terms of their core properties.

Vector spaces equipped with an inner product are called inner product spaces.
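
For real vector spaces, the three properties are usually written as follows: symmetry means ⟨x, y⟩ = ⟨y, x⟩; positivity means ⟨x, x⟩ ≥ 0, with equality only for x = 0; bilinearity means ⟨ax + by, z⟩ = a⟨x, z⟩ + b⟨y, z⟩, and likewise in the second argument. The sketch below spot-checks them for the familiar dot product on one hand-picked set of vectors. This is a sanity check, not a proof, and the helper names are illustrative.

```python
import math

def dot(x, y):
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(x, y))

def lincomb(a, x, b, y):
    """The vector a*x + b*y, computed coordinate by coordinate."""
    return tuple(a * xi + b * yi for xi, yi in zip(x, y))

x, y, z = (1.0, 2.0), (3.0, -1.0), (0.5, 4.0)
a, b = 2.0, -3.0

symmetric = math.isclose(dot(x, y), dot(y, x))
positive = dot(x, x) > 0 and dot((0.0, 0.0), (0.0, 0.0)) == 0
linear = math.isclose(dot(lincomb(a, x, b, y), z),
                      a * dot(x, z) + b * dot(y, z))

print(symmetric, positive, linear)   # True True True
```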

The familiar inner product in the Euclidean plane extends to n-dimensional spaces as well: multiply the coordinates pairwise and sum the products. This is a straightforward generalization, and since it satisfies all three properties (symmetry, positivity, and bilinearity), it is a proper inner product.

There is not just one inner product; there are countless variations. For instance, symmetric positive definite matrices can be used to construct a family of inner products. (In fact, all inner products for n-dimensional vectors can be written in this form.)
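
As an illustration of the matrix construction, a symmetric positive definite matrix A gives the inner product ⟨x, y⟩_A = xᵀAy. The sketch below uses one hand-picked 2×2 matrix; the name `inner_A` is made up for this example.

```python
def matvec(A, y):
    """Matrix-vector product, with A given as a list of rows."""
    return [sum(a * b for a, b in zip(row, y)) for row in A]

def inner_A(x, y, A):
    """<x, y>_A = x^T A y."""
    return sum(xi * zi for xi, zi in zip(x, matvec(A, y)))

# Symmetric and positive definite: leading minors are 2 > 0 and det = 5 > 0.
A = [[2.0, 1.0],
     [1.0, 3.0]]
I = [[1.0, 0.0],
     [0.0, 1.0]]   # the identity matrix recovers the usual dot product

x, y = (1.0, 0.0), (0.0, 1.0)
print(inner_A(x, y, I))   # 0.0: orthogonal under the usual dot product
print(inner_A(x, y, A))   # 1.0: not orthogonal under <., .>_A
```

The same pair of vectors is orthogonal under one inner product and not under the other, so angles depend on which inner product we pick.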

The inner product for the L² function space

The question remains: what about function spaces such as L², our guiding example? There, a natural idea is to use the Riemann integral: the inner product of f and g is the integral of f(x)g(x) over [-π, π].

I’ll explain why this is a perfectly natural idea.

Intuitively speaking, the integral is the signed area between the graph and the x-axis. (Signed means that when the function goes below the x-axis, the area is negative.)

We can approximate the shape under the curve by a series of columns, for which we can compute the area easily.

Thus, the integral is approximated by simple sums.

What you see in the approximating sums is the familiar inner product from the Euclidean spaces. (In a sense, the Riemann integral is a continuous sum.)

If the subdivision x₁, x₂, …, xₙ is equidistant (that is, the distances between neighbors are the same), the approximating sum is, up to the constant column width, the n-dimensional Euclidean inner product of the sampled function values. This is why the L² inner product is the natural generalization of the Euclidean one.
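
Here is a rough numerical sketch of that connection. It samples both functions on an equidistant grid, takes the Euclidean dot product of the two sample vectors, and scales it by the column width. Sampling at the midpoint of each column and using n = 10,000 columns are choices made for this example, not something the argument depends on.

```python
import math

def l2_inner(f, g, n=10_000, a=-math.pi, b=math.pi):
    """Approximate the integral of f(x) * g(x) over [a, b] by a midpoint Riemann sum."""
    dx = (b - a) / n                              # width of each column
    xs = [a + (i + 0.5) * dx for i in range(n)]   # midpoints of the columns
    fs = [f(x) for x in xs]                       # f sampled into an n-dimensional vector
    gs = [g(x) for x in xs]                       # g sampled into an n-dimensional vector
    # Euclidean dot product of the sample vectors, scaled by the column width.
    return dx * sum(u * v for u, v in zip(fs, gs))

print(l2_inner(math.sin, math.sin))   # close to pi
```

The exact value of the integral of sin²(x) over [-π, π] is π, so the printed number should agree with it to several digits.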

The magnitude and the inner product

One more thing before we move on. Inner products can be used to define the notion of magnitude and distance!

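Consider the case of the familiar Euclidean plane. There, the magnitude of a vector is the square root of its inner product with itself: ‖x‖ = √⟨x, x⟩.

Once more, we can use this connection to define norms on vector spaces equipped with an inner product! (Norm is just the fancy mathematical term for magnitude.)

From the norm, the notion of distance follows: the distance between x and y is ‖x − y‖, the norm of their difference.

This applies not just to the Euclidean plane, but to all inner product spaces, such as L². There, ‖f‖² is the integral of f(x)² over [-π, π], which is why we required the square of the function to be integrable.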
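
A small sketch of the norm and the distance in L², assuming the inner product is approximated by a midpoint Riemann sum on [-π, π] (the function names and the number of sample points are illustrative choices):

```python
import math

def l2_inner(f, g, n=10_000, a=-math.pi, b=math.pi):
    """Midpoint Riemann sum approximating the integral of f(x) * g(x) over [a, b]."""
    dx = (b - a) / n
    return dx * sum(f(a + (i + 0.5) * dx) * g(a + (i + 0.5) * dx) for i in range(n))

def l2_norm(f):
    """Norm induced by the inner product: sqrt(<f, f>)."""
    return math.sqrt(l2_inner(f, f))

def l2_distance(f, g):
    """Distance induced by the norm: the norm of the difference f - g."""
    return l2_norm(lambda x: f(x) - g(x))

print(l2_norm(math.sin))                 # close to sqrt(pi)
print(l2_distance(math.sin, math.cos))   # close to sqrt(2 * pi)
```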

With this under our belt, we are ready to turn towards the crux of this post: orthogonality.

Orthogonality

Our intuitive understanding of orthogonality is simple. Two vectors are orthogonal if their enclosed angle is 90° or π/2 radians.

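Inner products shed new light on this interpretation. Since the inner product of two nonzero vectors equals the product of their magnitudes and the cosine of their angle, and cos(π/2) = 0, a right angle is equivalent to a zero inner product.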

Thus, we are free to define orthogonality of two vectors by the vanishing of their inner product! This works in any inner product space, not just on the plane.

With this, we can talk about orthogonality in a much wider setting. Say, in function spaces.

Translated to the L² space, orthogonality is equivalent to the following.

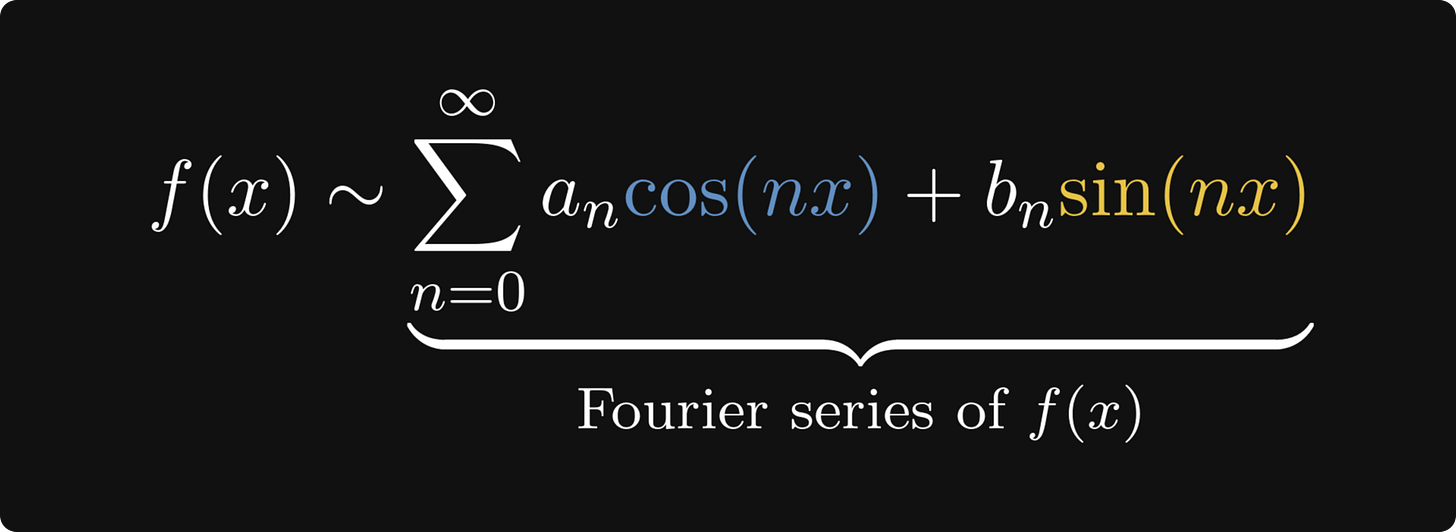

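Now we can see why the cosine and sine are orthogonal! Their product is an odd function, that is, cos(-x)sin(-x) = -cos(x)sin(x). Since the interval [-π, π] is symmetric about zero, the area to the left of the origin cancels the area to the right, so the signed area under the curve is zero.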
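
We can also check this numerically. The sketch below approximates the L² inner product with a midpoint Riemann sum (an illustrative choice, as are the function names) and then computes the angle between sine and cosine the same way we would for plane vectors.

```python
import math

def l2_inner(f, g, n=10_000, a=-math.pi, b=math.pi):
    """Midpoint Riemann sum approximating the integral of f(x) * g(x) over [a, b]."""
    dx = (b - a) / n
    return dx * sum(f(a + (i + 0.5) * dx) * g(a + (i + 0.5) * dx) for i in range(n))

def l2_angle(f, g):
    """Angle in radians between two nonzero functions in L2."""
    c = l2_inner(f, g) / math.sqrt(l2_inner(f, f) * l2_inner(g, g))
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    return math.acos(max(-1.0, min(1.0, c)))

print(l2_inner(math.sin, math.cos))                 # close to 0
print(math.degrees(l2_angle(math.sin, math.cos)))   # close to 90
```

Up to rounding, the inner product vanishes and the enclosed angle comes out as a right angle.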

This fact, albeit interesting, seems nothing more than a mathematical curio. I can assure you, this is far from the truth. Have you ever heard about the Fourier series? It’s a milestone akin to the invention of calculus. The gist is this: under certain conditions, functions can be written as the sum of sines and cosines.

Fourier series are behind a staggering number of breakthroughs in sciences. They work exactly because of the orthogonality of trigonometric functions. This is going to be our topic for next week!

Conclusion

On the surface, this post is about angles and orthogonality. In reality, it’s about a quintessential mathematical thinking process: abstraction.

The notions of angle and orthogonality are frequently used in geometry, physics, engineering, and other mathematical disciplines. Yet their real definitions are far from intuitive. What is an angle and how do we even measure it? What are the vectors whose enclosed angles we want to measure?

To answer these questions, we must strip away all the details until only the skeleton remains. An arrow is not the definition of a vector; it is just a model of one. When the core properties are identified, we find a set of axioms that cannot be taken away without altering the nature of our object.

This is abstraction. With it, we can treat functions as vectors and transfer knowledge from one field to the other. Such ideas are extremely powerful.
