Epsilons, no. 5: The QR decomposition

matrices + the Gram-Schmidt process = magic

I start all my posts about matrix decompositions by saying that “matrix factorizations are the pinnacle results of linear algebra”. These few words perfectly express their place in mathematics, science, and engineering: it is hard to overestimate the practical importance of matrix decompositions.

Behind (almost) every high-performance matrix operation, there is a matrix decomposition. This time, we’ll look at the one that enables us to iteratively find the eigenvalues of a matrix: the QR decomposition.

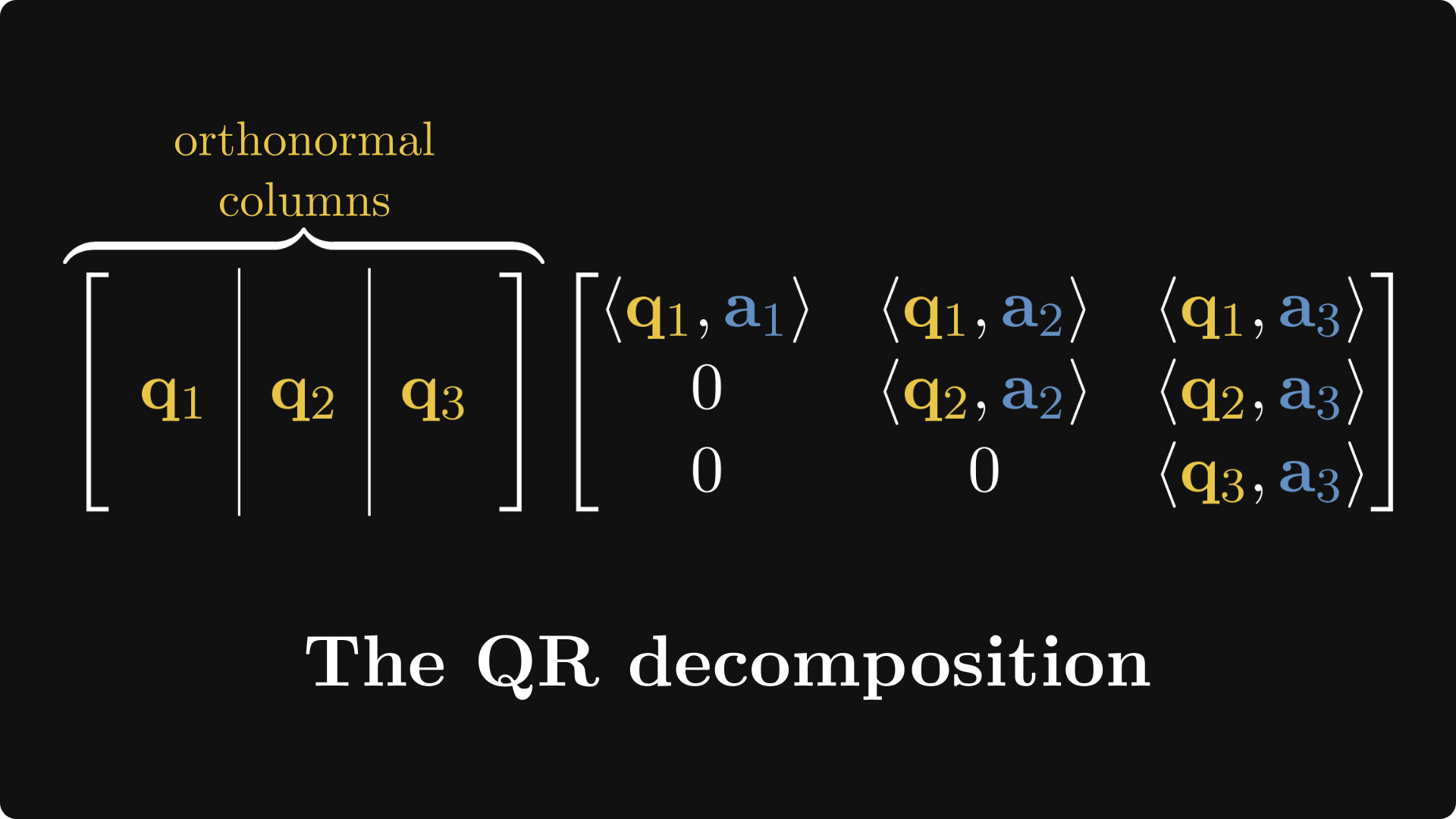

In essence, the QR decomposition factors an arbitrary matrix into the product of an orthogonal and an upper triangular matrix.
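Before looking at how it works, here is a quick numerical sanity check of that claim. This is a minimal sketch, assuming NumPy is available; the matrix `A` is a made-up 3 x 3 example, and `np.linalg.qr` is NumPy's built-in routine, not the method developed in this post.

```python
import numpy as np

# A hypothetical 3 x 3 matrix, chosen only for illustration.
A = np.array([[2.0, 1.0, 1.0],
              [1.0, 3.0, 2.0],
              [1.0, 0.0, 0.0]])

# NumPy's built-in QR routine returns the two factors.
Q, R = np.linalg.qr(A)

# Q is orthogonal: its transpose is its inverse (up to rounding).
print(np.allclose(Q.T @ Q, np.eye(3)))

# R is upper triangular: every entry below the diagonal is zero.
print(np.allclose(R, np.triu(R)))

# Multiplying the factors gives back the original matrix.
print(np.allclose(Q @ R, A))
```

All three checks use `np.allclose` rather than exact equality because the factors are computed in floating point.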

How does it work? We’ll illustrate everything with the 3 x 3 case, but the same argument works in general.

First, some notation. Every matrix can be thought of as a sequence of column vectors. Tru…