Building an interface for machine learning

Laying the foundations of our handmade machine learning library

This post is the next chapter of my upcoming Mathematics of Machine Learning book, available in early access.

New chapters are also available to premium subscribers of The Palindrome, but 40+ chapters (~500 pages) are available exclusively to early-access members.

Until this point, we have focused on the theoretical aspects of machine learning without spending time building a modular interface.

Linear and logistic regression are so simple that we can easily get by with a few Python functions, but the code complexity rapidly shoots up with model complexity. Defining and training a neural network in the good old procedural style is a nightmare.

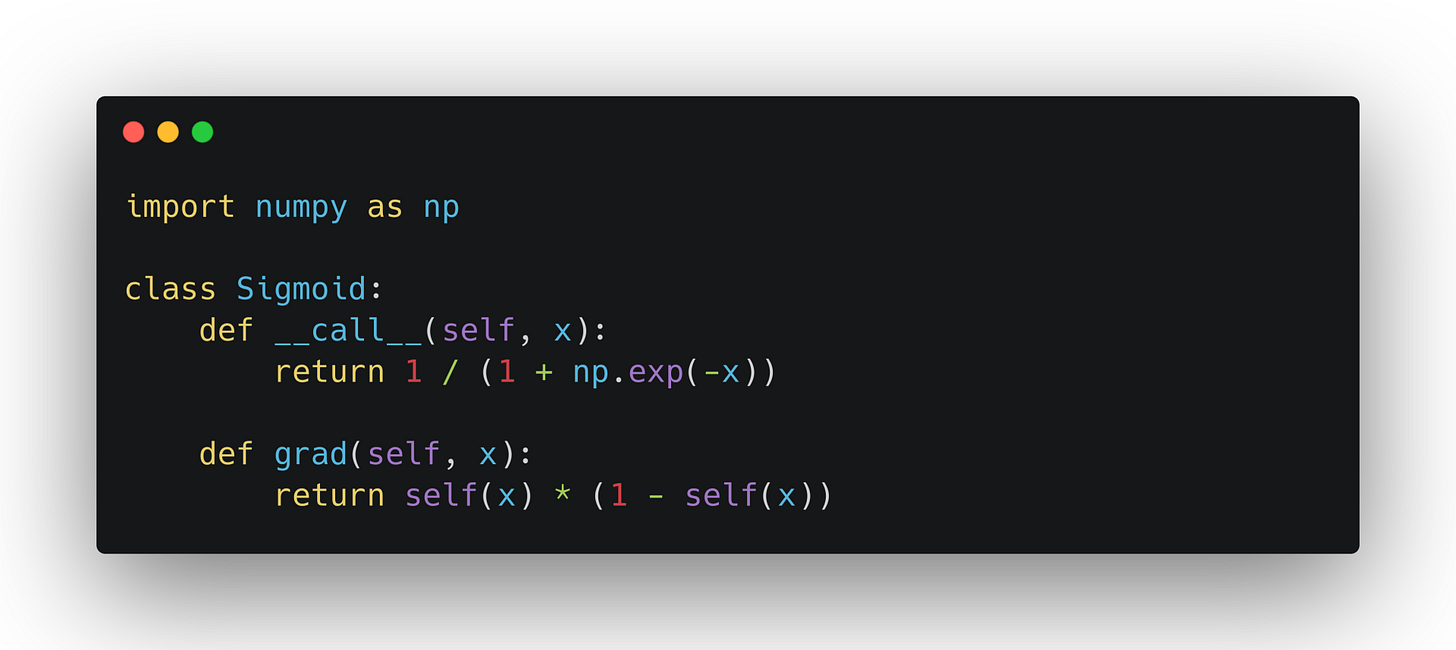

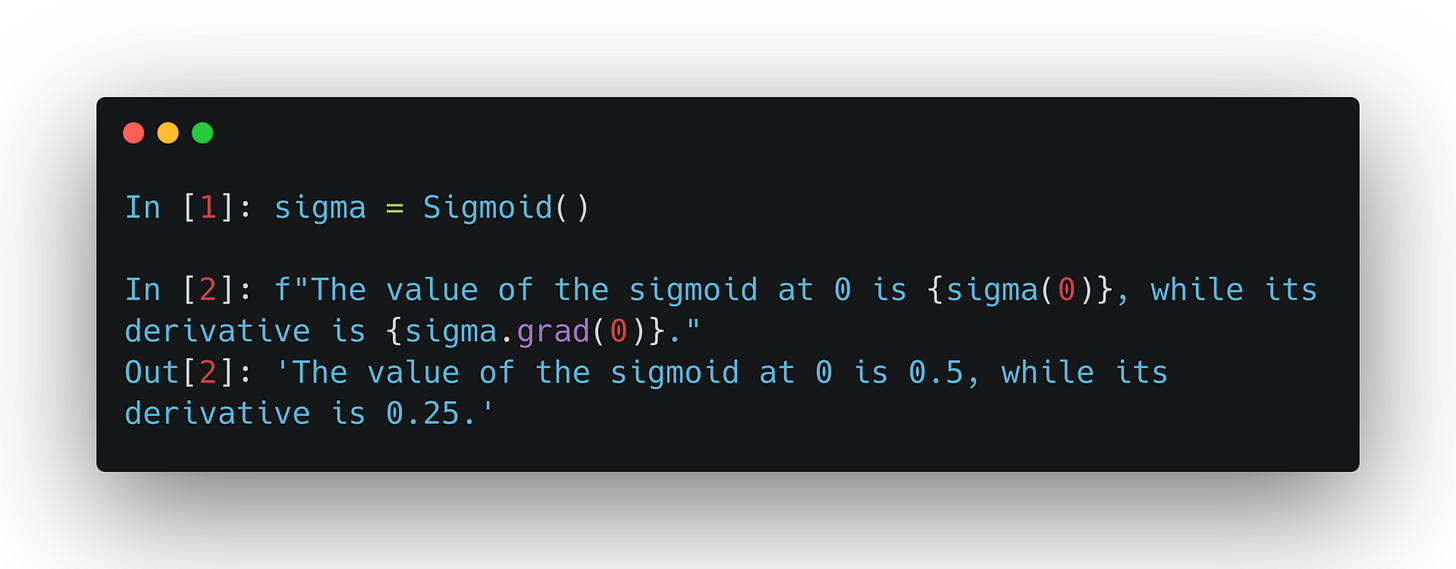

Python allows us to manage complexity via classes and methods. Let's see an example. Read the following snippet line by line.

(The method of sharing code via screenshot + GitHub gist was ~~stolen from~~ inspired by The Python Coding Stack • by Stephen Gruppetta. I read this Substack regularly, and I recommend it wholeheartedly to you as well.)

The class Sigmoid is an object-oriented representation of the sigmoid function. It encapsulates the essential sigmoid-related functionality: evaluating the function and evaluating its derivative. Nothing more, nothing less.
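In case the screenshot doesn't load for you, here is a minimal text sketch of what such a class might look like. Treat it as an approximation rather than the exact snippet: the method name `prime` is my placeholder for the derivative, and I'm assuming scalar inputs and only the standard library's `math` module to keep it self-contained.

```python
import math


class Sigmoid:
    """Object-oriented wrapper around the sigmoid function (scalar inputs only)."""

    def __call__(self, x: float) -> float:
        # Pick the algebraically equivalent form that keeps exp() from overflowing.
        if x >= 0:
            return 1.0 / (1.0 + math.exp(-x))
        z = math.exp(x)
        return z / (1.0 + z)

    def prime(self, x: float) -> float:
        # Hypothetical name for the derivative: sigma'(x) = sigma(x) * (1 - sigma(x)).
        s = self(x)
        return s * (1.0 - s)


sigmoid = Sigmoid()
print(sigmoid(0.0))        # 0.5
print(sigmoid.prime(0.0))  # 0.25
```

Because of `__call__`, the instance behaves like a plain function, while the derivative lives right next to it instead of in a separate, loosely related helper.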

Trust me when I say this: going from the procedural style to object-oriented is like firing up an FTL jump drive. As a math graduate student, learning object-oriented programming (OOP) supercharged my knowledge and performance when I started in machine learning.

A good design goes a long way. In this chapter, we'll set up the framework for all of our machine learning models, with scikit-learn (for classical models) and PyTorch (for neural networks) as our inspirations.
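To make the two inspirations concrete before we design anything, here is a rough sketch of the conventions we'll be borrowing: scikit-learn estimators expose `fit` (which returns the estimator itself) and `predict`, while PyTorch modules define `forward` and run it when the object is called. The `Model` base class and the `MeanRegressor` toy below are hypothetical names made up for this illustration, not the interface we'll end up with.

```python
from abc import ABC, abstractmethod


class Model(ABC):
    """Hypothetical base class, only meant to illustrate the two conventions."""

    @abstractmethod
    def forward(self, x):
        """Compute the prediction for a single input (PyTorch-style)."""

    def __call__(self, x):
        # PyTorch convention: calling the model runs the forward pass.
        return self.forward(x)

    @abstractmethod
    def fit(self, xs, ys):
        """Estimate parameters from data and return self (scikit-learn-style)."""

    def predict(self, xs):
        # scikit-learn convention: predict maps a batch of inputs to outputs.
        return [self(x) for x in xs]


class MeanRegressor(Model):
    """Toy model that always predicts the mean of the training targets."""

    def __init__(self):
        self.mean = 0.0

    def forward(self, x):
        return self.mean

    def fit(self, xs, ys):
        self.mean = sum(ys) / len(ys)
        return self


model = MeanRegressor().fit([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(model.predict([10.0, 20.0]))  # [4.0, 4.0]
```

The payoff is that any code written against `fit`, `predict`, and `__call__` works with every subclass, no matter how complicated its internals are.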